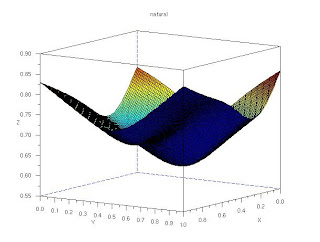

Below is an image of a "grid" capiz window. Notice that the image has a barrel distortion effect: the square grid cells at the edges are much smaller than those at the center. The center appears bloated while the sides are pinched. This is due to the "imperfect" lens of the camera that captured the image.

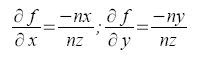

Our goal is to correct this distortion. We use the center square grid as our reference since it is the least distorted. We then determine the transformation matrix that describes the barrel effect. Let f(x,y) denote the ideal image at coordinates (x,y) and g(x',y') the distorted image at coordinates (x',y'). To determine the transformation matrix C, we locate where the coordinates of the ideal image fall in the distorted image.

We then compute the transformation matrix C using:
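As a rough sketch of this step, assume a bilinear mapping within each grid cell, x' = c1·x + c2·y + c3·x·y + c4 and y' = c5·x + c6·y + c7·x·y + c8. This is a common choice for this kind of correction and not necessarily the exact form used here. Under that assumption, the four corner correspondences of a cell give two 4x4 linear systems, one for the x' coefficients and one for the y' coefficients. The function names and the sample corner values below are hypothetical.

```python
def solve_linear(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix, copied so the inputs are left untouched
    M = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        # Pick the row with the largest entry in this column as the pivot
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("corner points are degenerate")
        M[col], M[pivot] = M[pivot], M[col]
        # Eliminate this column from every other row
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * p for a, p in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def fit_cell_mapping(ideal_corners, distorted_corners):
    """Fit the assumed bilinear mapping from ideal (x, y) to distorted (x', y').

    Each argument is a list of four (x, y) tuples in matching order.
    Returns (cx, cy), the coefficient lists for x' and y'.
    """
    T = [[x, y, x * y, 1.0] for x, y in ideal_corners]
    cx = solve_linear(T, [xp for xp, _ in distorted_corners])
    cy = solve_linear(T, [yp for _, yp in distorted_corners])
    return cx, cy


def map_point(cx, cy, x, y):
    """Apply the fitted mapping to one ideal-image coordinate."""
    basis = (x, y, x * y, 1.0)
    xp = sum(c * t for c, t in zip(cx, basis))
    yp = sum(c * t for c, t in zip(cy, basis))
    return xp, yp


# Hypothetical corner coordinates for a single grid cell
ideal = [(0, 0), (10, 0), (10, 10), (0, 10)]
distorted = [(1, 2), (9, 1), (10, 11), (0, 9)]
cx, cy = fit_cell_mapping(ideal, distorted)
print(map_point(cx, cy, 5, 5))  # where the cell center lands in the distorted image
```

The small hand-written solver keeps the sketch dependency-free; with NumPy available, `numpy.linalg.solve` would do the same job.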

Now that we have determined the transformation matrix, we simply copy the graylevel v(x',y') of the pixel located at g(x',y') into f(x,y). But since the calculated (x',y') coordinates are real-valued (pixel coordinates should be integers), we use bilinear interpolation. The graylevel of an arbitrary point is determined by the graylevels of the 4 nearest pixels surrounding that point.

We can now solve for the graylevel using:
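A minimal sketch of the interpolation, assuming the image is stored as a list of rows (`image[y][x]`) that is at least 2x2 pixels. On the unit square formed by the four neighbours, this weighted average is equivalent to fitting v = a·x + b·y + c·x·y + d through their graylevels. The function name and the tiny sample image are hypothetical.

```python
import math


def bilinear_graylevel(image, xp, yp):
    """Interpolate the graylevel at a real-valued location (xp, yp).

    `image` is a list of rows, indexed as image[y][x], at least 2x2 in size.
    """
    height, width = len(image), len(image[0])
    # Clamp the query so the 2x2 neighbourhood stays inside the image
    xp = min(max(xp, 0.0), width - 1.0)
    yp = min(max(yp, 0.0), height - 1.0)
    # Top-left pixel of the neighbourhood
    x0 = min(int(math.floor(xp)), width - 2)
    y0 = min(int(math.floor(yp)), height - 2)
    x1, y1 = x0 + 1, y0 + 1
    # Fractional offsets from the top-left pixel
    dx, dy = xp - x0, yp - y0
    # Interpolate along x on the two rows, then along y between them
    top = (1 - dx) * image[y0][x0] + dx * image[y0][x1]
    bottom = (1 - dx) * image[y1][x0] + dx * image[y1][x1]
    return (1 - dy) * top + dy * bottom


# Hypothetical 2x2 image: graylevels at the four corners
sample = [[0, 10],
          [20, 30]]
print(bilinear_graylevel(sample, 0.5, 0.5))  # center of the four pixels
```

Clamping at the borders is one possible design choice; leaving out-of-range locations blank would be another.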

For the remaining blank pixels in the ideal image, we again interpolate from the four nearest corners to determine their graylevels. Below is a comparison of the original distorted image and the enhanced (ideal) image. Notice that at the lower left, the square grid cells in the enhanced image are larger. The grid lines are also closer to parallel. The resulting image shows less of the distortion, and it no longer looks bloated.

Original distorted image

Enhanced ideal image

I think I've performed the activity successfully. The distorted image was enhanced. I want to give myself a 10.